Apache Spark: Unified Engine for Analytics

Apache Spark is a unified analytics engine built for speed, scale, and intelligent data workloads. It is also a core component that powers our Databricks Data and AI platform for scalable enterprise analytics.

It powers large-scale data engineering, streaming analytics, and machine learning on clusters or cloud platforms, processing petabyte-scale data up to 100x faster than disk-based frameworks such as Hadoop MapReduce when workloads run in memory.

By centralizing SQL, real-time data pipelines, and artificial intelligence (AI) workloads in one engine, Apache Spark helps organizations reduce infrastructure costs, accelerate insight delivery, and unlock higher ROI from their data investments.

The Engine for Scalable Computing

Apache Spark is a multi-language engine for executing data engineering, data science, and machine learning (ML) on single-node machines or clusters.

At its core, Spark provides a distributed execution engine, Spark Core, that manages parallel computation, fault tolerance, and high-throughput data operations. Its adaptive query execution, ANSI SQL support, and optimized DataFrame API streamline analytics while improving performance and reducing runtime costs.

On top of this foundation, Spark delivers an integrated suite of libraries: Spark SQL for structured and semi-structured data, Structured Streaming for real-time pipelines, MLlib for scalable machine learning, and GraphX for graph computation.

Together, these capabilities allow enterprises to unify batch, streaming, and ML workloads in a single high-performance platform, accelerating insight delivery across the modern data ecosystem.

Key Features & Capabilities

Apache Spark delivers a single engine for high-performance analytics, real-time pipelines, and large-scale machine learning. Its design lets organizations process structured and semi-structured data, in batch or as a stream, while maintaining speed, reliability, and enterprise controls. It is one of the core data technologies we use to build scalable, modern analytics solutions.

- Batch & Streaming Data: Process batch and real-time streaming data in one engine using Python, SQL, Scala, Java, or R, enabling consistent logic across ETL workflows, streaming jobs, and analytics pipelines.

- SQL Analytics: Run fast, distributed ANSI SQL queries for dashboards, business intelligence (BI) workloads, and ad-hoc analysis, with interactive performance that can outpace many traditional data warehouses.

- Data Science at Scale: Perform exploratory data analysis on massive datasets without downsampling by using optimized DataFrames and Spark’s high-performance execution engine for faster experimentation.

- Machine Learning: Build ML models locally and scale the same code to fault-tolerant clusters, training classification, regression, clustering, and recommendation algorithms at enterprise scale.

Business Benefits

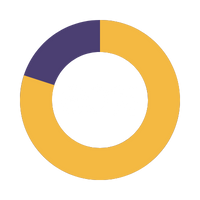

Apache Spark is the most widely used engine for large-scale computing. It is trusted by thousands of companies—including 80% of the Fortune 500—and supported by more than 2,000 open-source contributors from both industry and academia.

This widespread use highlights Spark’s ability to speed up data engineering, analytics, and machine learning. It also strengthens operational efficiency and delivers clear ROI.

Its in-memory computing can make processing up to 100x faster than disk-based frameworks for some workloads. This reduces infrastructure costs and lets organizations move from raw data to insights quickly.

Spark also boosts developer efficiency through unified APIs for SQL, streaming, data science, and machine learning. This helps teams build and scale applications faster with less code complexity.

Its cluster scaling supports petabyte-level workloads. Open-source innovation continuously enhances reliability and performance.

By consolidating multiple workloads into a single engine, Spark minimizes operational effort. It also ensures consistency across data engineering, analytics, and ML pipelines, making it a powerful foundation for a modern, high-performance data environment.

Apache Spark Use Cases Across Industries

Organizations use Apache Spark to simplify complex data processing at scale. It supports everything from small interactive analyses to large distributed workloads.

Spark’s single engine enables teams to handle structured and unstructured data through DataFrames, SQL, Structured Streaming, and Resilient Distributed Datasets (RDDs). This makes it suitable for a wide range of analytical and operational scenarios.

- Interactive Analytics: Teams use Spark DataFrames for fast data exploration, transformations, and aggregations without mutating source datasets.

- SQL-Based Reporting: Analysts query DataFrames directly with SQL, persist results using Parquet tables, and run advanced aggregations without switching tools.

- Real-Time Pipelines: Structured Streaming powers continuous ingestion from sources like Kafka, enabling automated hourly or event-driven updates.

- Unstructured Data Processing: RDDs support text processing and parallel computation for scenarios requiring full control over low-level data operations.
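
The RDD-style text processing described above usually follows a map-then-reduce-by-key pattern: each partition of the data is processed independently, and the partial results are merged by key. The sketch below illustrates that pattern in plain Python, without Spark. All function names are hypothetical, and the in-memory lists only stand in for cluster partitions. In PySpark the same steps are typically written with the RDD methods `flatMap`, `map`, and `reduceByKey`.

```python
from collections import Counter
from functools import reduce


def split_into_partitions(lines, num_partitions):
    """Deal lines round-robin into partitions (a stand-in for data spread over a cluster)."""
    return [lines[i::num_partitions] for i in range(num_partitions)]


def count_words_in_partition(partition):
    """Map side: tokenize each line and count words locally within one partition."""
    counts = Counter()
    for line in partition:
        counts.update(line.lower().split())
    return counts


def merge_counts(left, right):
    """Reduce side: merge two partial results by key (the role reduceByKey plays in Spark)."""
    return left + right


def word_count(lines, num_partitions=2):
    """Count words by processing partitions independently, then merging the partial counts."""
    partitions = split_into_partitions(lines, num_partitions)
    # Each partition is handled on its own, so this step could run in parallel.
    partials = [count_words_in_partition(p) for p in partitions]
    return reduce(merge_counts, partials, Counter())


# Hypothetical sample input for illustration only.
lines = ["spark unifies batch and streaming", "spark scales batch jobs"]
totals = word_count(lines)
print(dict(totals))
```

Because the per-partition step never reads another partition's data, the result does not depend on how many partitions are used. That property is what lets an engine like Spark distribute the work across many machines.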

Integrations & Compatibility

Apache Spark integrates seamlessly with a broad ecosystem of data science, analytics, and infrastructure systems. This makes it easy to scale existing workflows to thousands of machines.

Spark works with leading data science and machine learning tools such as scikit-learn, pandas, TensorFlow, PyTorch, MLflow, R, and NumPy.

For SQL analytics and BI, Spark works with Superset, Power BI, Looker, Tableau, Redash, and dbt.

Its compatibility extends to storage systems, file formats, and infrastructure, including Elasticsearch, MongoDB, Kafka, Delta Lake, Kubernetes, Parquet, SQL Server, Cassandra, and Apache ORC.

brs + Apache Spark = Large-Scale Data Analytics

brs uses Apache Spark to accelerate data engineering, analytics, and machine learning across modern cloud ecosystems.

By unifying batch, streaming, and ML workloads in one high-performance engine, brs helps organizations build faster pipelines, reduce operational complexity, and scale analytics with confidence—all while maximizing the value of their existing data platforms and investments.

Build High-Performance Data Pipelines with Confidence

With Apache Spark and brs, organizations across Canada and the rest of North America modernize workflows, reduce complexity, and accelerate decision-making.

Turn Your Data into Insights.
